A web crawler (also known as a spider or bot) is an automated program that systematically browses the internet to index web content. Crawlers navigate websites by following links and recording information about each page they visit. Search engines such as Google, Bing, and Yahoo use crawlers to build and maintain their search indexes, so that users receive up-to-date results.

A crawler starts with a list of URLs to visit (the seed list), downloads and parses those pages to find new links, adds the new links to its queue, and repeats the process. For digital marketers, understanding crawler behavior is crucial for SEO because it affects how and when content gets indexed. Webmasters can guide crawlers through robots.txt files, XML sitemaps, and specific meta tags such as the robots meta tag. Modern crawlers have evolved to execute JavaScript, render complex web applications, and prioritize the mobile version of a page, as in Google's mobile-first indexing.
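The crawl loop described above (start from seed URLs, fetch each page, extract its links, queue the new ones, repeat) can be sketched in Python using only the standard library. This is a minimal illustration under stated assumptions, not how any search engine's crawler actually works: the `crawl` function, the `ExampleBot` user agent, the `max_pages` limit, and the in-memory `pages` site are all hypothetical. `fetch` is passed in as a function so the sketch runs without network access, and robots.txt rules are checked with the standard `urllib.robotparser` module before each fetch.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Optional
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.robotparser import RobotFileParser


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(
    seeds: list[str],
    fetch: Callable[[str], Optional[str]],
    robots: RobotFileParser,
    user_agent: str = "ExampleBot",  # hypothetical crawler name
    max_pages: int = 100,            # hypothetical safety limit
) -> list[str]:
    """Breadth-first crawl from the seed URLs; returns fetched URLs in visit order."""
    frontier = deque(seeds)  # URLs waiting to be visited (FIFO = breadth-first)
    seen = set(seeds)        # every URL ever queued, so no page is queued twice
    visited: list[str] = []

    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        if not robots.can_fetch(user_agent, url):
            continue  # skip URLs that robots.txt disallows for this agent
        html = fetch(url)
        if html is None:
            continue  # fetch failed or page unavailable
        visited.append(url)

        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop "#fragment" parts.
            link, _ = urldefrag(urljoin(url, href))
            # Stay on the current host: one robots.txt applies per host.
            if urlparse(link).netloc != urlparse(url).netloc:
                continue
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited


# Usage with a tiny in-memory "site" (hypothetical pages, no network needed).
pages = {
    "https://example.com/": '<a href="/about.html">About</a> <a href="/private/x.html">X</a>',
    "https://example.com/about.html": (
        '<a href="/">Home</a> <a href="https://other.org/">Out</a> '
        '<a href="/blog.html#top">Blog</a>'
    ),
    "https://example.com/blog.html": "<p>No links here.</p>",
    "https://example.com/private/x.html": "<p>Not for crawlers.</p>",
}
robots = RobotFileParser()
robots.parse(["User-agent: *", "Disallow: /private/"])

visited = crawl(["https://example.com/"], pages.get, robots)
print(visited)
# ['https://example.com/', 'https://example.com/about.html', 'https://example.com/blog.html']
```

The queue is a `deque` used first-in, first-out, so pages closer to the seed are visited first. The disallowed `/private/` page is discovered but never fetched, and the off-site link is ignored. For a live site, `fetch` could wrap `urllib.request.urlopen`, and the rules could be loaded with `RobotFileParser.set_url()` followed by `read()`; a real crawler would also need politeness delays, error handling, and JavaScript rendering, which this sketch omits.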

Web Crawler

Our Services

We offer a full suite of marketing solutions designed to elevate your brand and drive growth. From strategic branding and digital marketing to content creation and performance analytics, our services are tailored to meet your unique business goals and deliver measurable results.

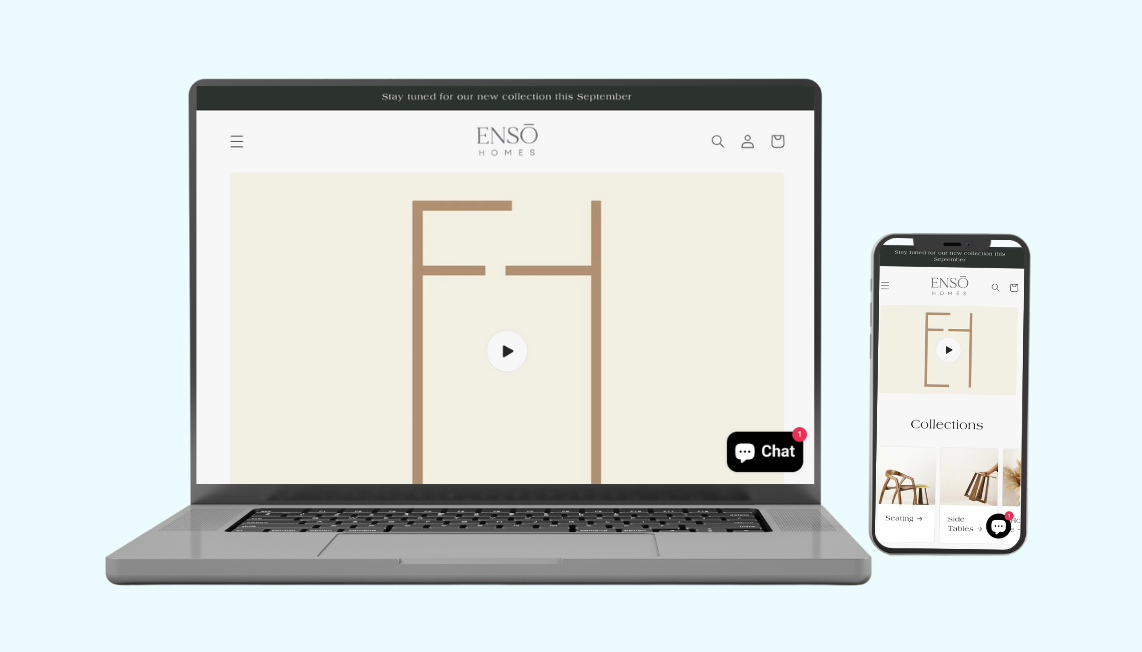

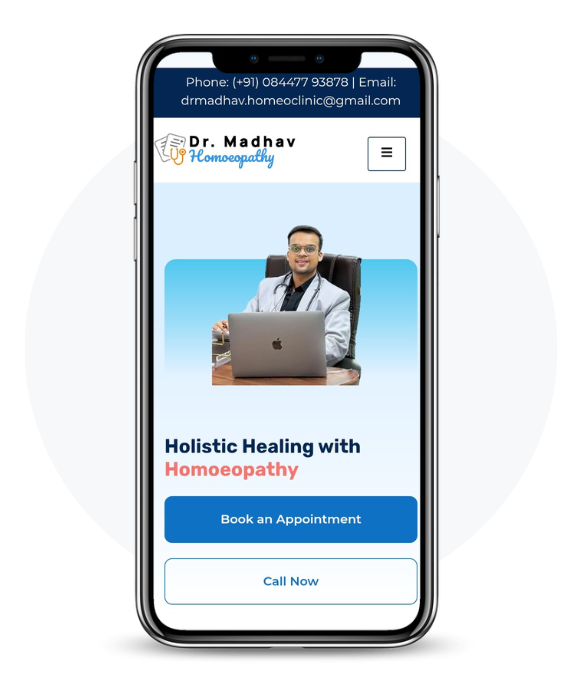

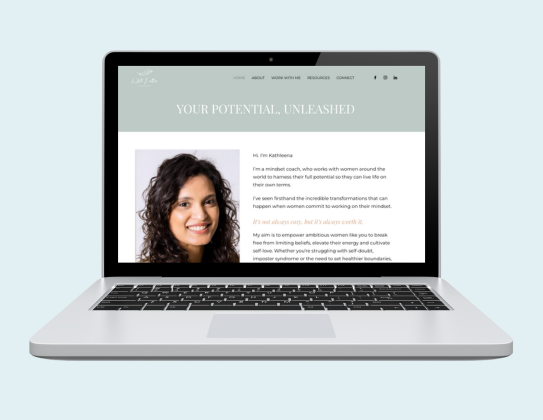

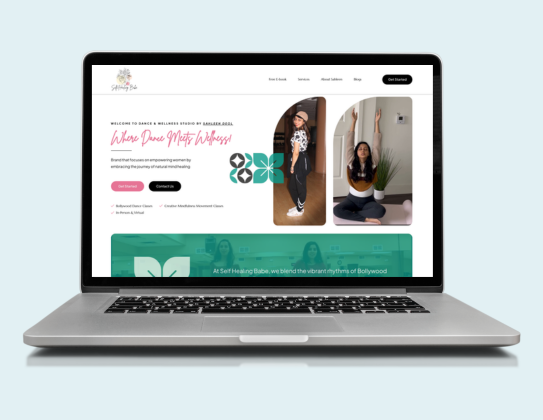

Web Development

We design and develop responsive, high-performing websites that captivate audiences and drive business growth, ensuring a seamless user experience across all devices.

Social Media Marketing

We create engaging social media campaigns that connect with your audience, build brand loyalty, and drive meaningful interactions across platforms.

Lead Generation

Our data-driven strategies help attract, engage, and convert high-quality leads, ensuring a steady stream of potential customers for your business.

Content Marketing

Our content marketing strategies deliver compelling content that informs, engages, and converts, helping establish your brand as an industry leader.

Search Engine Optimization (SEO)

Boost your online visibility and outrank competitors with our proven SEO strategies, designed to improve search rankings and organic traffic.

Pay-Per-Click (PPC) Advertising

Maximize your ROI with targeted PPC campaigns that deliver instant visibility, attract the right audience, and drive immediate results.

Learn More →

Our Progress

Driven by innovation and data, our journey is marked by continuous growth and success. We take pride in delivering measurable results, exceeding client expectations, and expanding our impact across industries and markets.

Clients

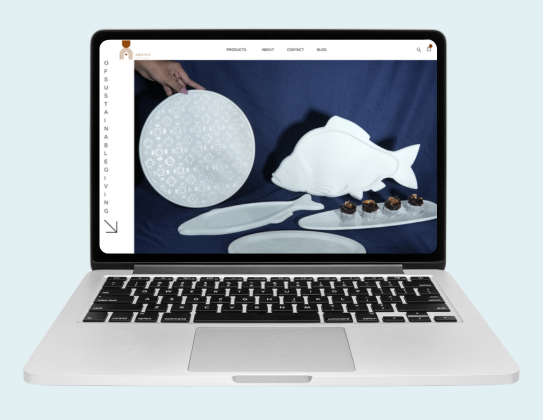

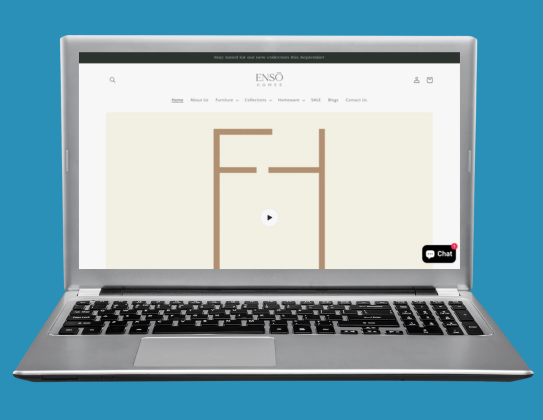

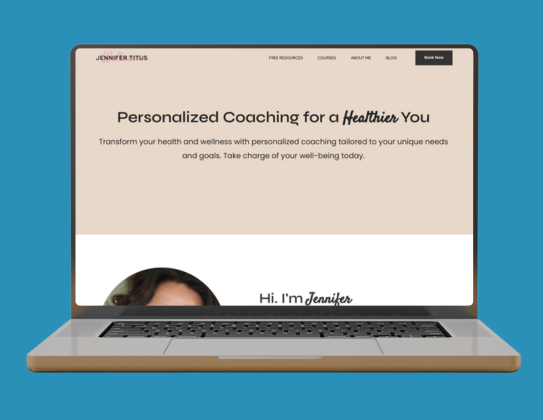

Websites Built

The best experience with the agency!

Working with Moving-Upward has been a game-changer for our business. Their expertise in digital marketing helped us achieve remarkable growth, increase brand awareness, and generate quality leads. The team’s dedication and strategic approach set them apart. Highly recommended!

Moving-Upward exceeded our expectations with their strategic approach. Their efforts helped us boost engagement and achieve significant business growth.

Thanks to Moving-Upward, our sales have skyrocketed. Their innovative marketing strategies and attention to detail have been invaluable to our success.

Partnering with Moving-Upward was the best decision for our brand. Their expertise in digital marketing helped us reach new heights effortlessly.

Michael Smith
